Making of glossr

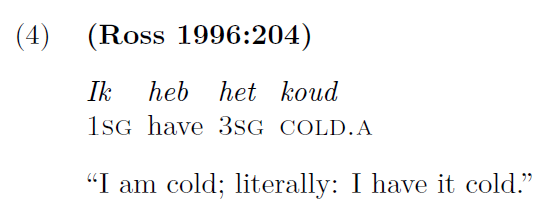

A few weeks ago I started working on a side project (as I often do) that became glossr: an R package that allows you to insert interlinear glosses in an R Markdown file and render them in HTML, PDF and Word¹. Interlinear glosses, in case you don’t know, are a way of presenting examples in a language other than the language of your text, rendering both a free translation of the example and a “word-by-word” translation with morphological information. I haven’t used them much myself, but I would love to. What really kicked this off was talking to a colleague, Giulia Mazzola, one of the first people to get really excited when I told her about R Markdown. I convinced her to write her PhD thesis in R Markdown and she, in turn, encouraged me to write blogposts about it and also inspired other colleagues. However, glosses were proving challenging enough that she was considering going back to MS Word (the heresy!).

I had to do something about that! More importantly, I knew I could. One of her problems was that her glosses only worked in LaTeX output and wouldn’t render in HTML. Another was that styling a large piece of text within a gloss required her to apply LaTeX formatting to each word individually, which was exhausting. Not to mention (and this is my motivation for so much of the code I write) that the more you type, the more chances you have of making typos.

The easier issue to solve was the latter: a function that takes a string and a LaTeX formatting instruction and applies it to each word (unless the words are joined with brackets). This became glossr::gloss_format_words(). The real challenge was to find a way for the same R input to render in both HTML and PDF (Word came later, encouraged by Thomas Van Hoey). And this is where it gets interesting.
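To give an idea of what such a function involves, here is a minimal sketch. It assumes words are separated by single spaces and that curly braces mark a group of words to be formatted as one unit; the name format_each_word() is hypothetical, and this is not glossr's actual implementation of gloss_format_words().

```r
# Hypothetical helper: wrap every word of `text` in the LaTeX command `formatting`,
# treating a {curly-brace group} as a single unit.
format_each_word <- function(text, formatting) {
  # Tokens are either a {braced group} or a run of non-space characters;
  # perl = TRUE makes the braced alternative win when both could match.
  tokens <- regmatches(text, gregexpr("\\{[^}]*\\}|[^ ]+", text, perl = TRUE))[[1]]
  # Drop the grouping braces so each group is wrapped only once
  tokens <- sub("^\\{(.*)\\}$", "\\1", tokens, perl = TRUE)
  # "\\" is a single backslash, so "textit" becomes \textit{...}
  paste0("\\", formatting, "{", tokens, "}", collapse = " ")
}

cat(format_each_word("el {perro grande} ladra", "textit"))
# \textit{el} \textit{perro grande} \textit{ladra}
```

Writing the grouping rule into the tokenizer keeps the formatting step itself trivial: every token, single word or group, gets exactly one command.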

Ingredients to solve a problem

Problem solving involves a mix of creative thinking and a rich enough toolkit. The more tools you have, the more ways you can find to achieve a goal. The catch, I find, is that in order to really get those tools (to feel comfortable with them, to be able to connect them to their tasks), you need to encounter problems they can solve. So, you need tools to solve problems, and you need problems to acquire tools. This is a challenging issue, but not one without a solution :)

The typical approach is to take courses and read materials. You go online, for example, and sign up for an introductory course in R. Or you pick up a book or a blogpost and see what you can learn from it. The examples in courses and books are not always going to be ones you identify with. They will show you how to open a file, how to measure the length of an object, how to add 2 and 2 or print “Hello World”. At that moment, you may not really see the point, either because you don’t have pressing issues that can be linked to these examples, or because your wishes are so ambitious that the link looks weak anyhow.

Another approach that I personally like is to take detours to solve seemingly useless but present and personal problems. One day, you will have some small issue that you could live without solving. Say that you are writing “Hello World” and you want World to be green. That is currently beyond both your knowledge and your pressing needs: it’s just a wish born out of the creative fire in your heart. You don’t really need to, but you want to, and you know it’s possible. So you spend an insane amount of time researching how to color a word green in the context in which you are writing. Maybe it’s as easy as selecting the word and clicking on a button. Maybe you have to write some code around it. Maybe it is not supported at all. But after a while, you will know. And some later day you will indeed need to color a word (maybe green, maybe another color), and your past detour will let you go about it faster this time around, and even explore more challenging tasks that build on the block you accidentally added to your skills. Or, say, you bought vanilla essence to use one drop for those muffins you wanted to try… and you still have it a few weeks later when you want to try something else. I realize that this approach to learning requires you to have the time and the resources to take detours and explore things that might take longer than you expect without impressive results. It’s an investment that looks like a bet, and it’s hard to convince yourself (and whoever you owe your time to) that it is, indeed, worth it. I hope you can, because it feels awesome.

My story with glossr mixes both approaches to learning in an interesting way. I didn’t have all the technical knowledge I needed to solve my problems, but I was determined to do it one way or another. (And I decided that if I didn’t have some sort of “satisfying” answer after a day, maybe two, I’d give up. It mostly works.) What I did have was a strong scaffolding of previous knowledge that I had acquired by taking detours for fun, which often involved reading books. Of course, my very first steps had been taken with online courses and books.

First of all, I knew how to create a package, how to create its pkgdown site and even how to create a hexagon sticker. I had done all that before for another package with a narrower audience. For that package I had had to learn pkgdown and how to create hexagon stickers, but I already knew how to start and organize a package, because I’d tried it with code of mine that nobody else will ever see. Back then, I had mostly read Hadley Wickham and Jenny Bryan’s R Packages book. I also knew how to add citation information and a DOI from yet another project. Having spent time on these skills before meant a lighter load this time. My earlier efforts paid off in projects I hadn’t even imagined back then. As a consequence, I could also focus on best practices that I had not implemented in my previous projects: I actually read the tidyverse style guide, I started a NEWS.md file, I wrote lots of tests and I did my best with version control. When you start writing your package, or any other such project, you might find that there is just too much to learn: how to do it, how to do it well, how to maintain it… Some tasks give you a result, others might make it prettier or more useful or clearer, and at some point you don’t really know how to approach the whole thing anymore. I find it important to give yourself space to learn piece by piece, mastering some pieces and messing up others, slowly adding blocks to your skills and forgiving yourself for the blocks that are still missing. Especially if you do this alone and have to keep everything in your own head.

Second, I had enough R knowledge to solve some of the challenges and to know how to look up other answers. I was familiar enough with other tools to think that there was, indeed, a solution to the problem, and that it was within my reach, even if I had to stretch quite far. To make this more concrete, let’s break down the problem, more or less as I did.

Mise-en-place

After gloss_format_words() was done (under another name back then), the main task was to write a function that would output PDF glosses or HTML glosses depending on the output format of the R Markdown file. This can be split into three parts. First, you need a function that takes a gloss (or its meaningful parts) as input and returns the LaTeX version. Then, you need a function that takes the same kind of input but returns whatever you need for HTML. Lastly, you need a function that knows whether your output format is PDF or HTML and calls the appropriate conversion function. The happy news was that I already knew the functions that tell you the output format of an R Markdown file (knitr::is_latex_output() and knitr::is_html_output()), thanks to playing with output-specific features for my PhD dissertation. I also had an idea of how to create the LaTeX output: I knew how to write glosses in LaTeX, which involved a rather fixed template. I just had to take the right pieces and put them inside the template (e.g. with sprintf()), and then I could call the function from an R Markdown chunk with results = "asis" and it would work. I knew how to do that because an adventurous past self once decided to generate many plots programmatically: instead of copy-pasting the plotting function for each of the datasets I wanted to plot, I ran a loop that created and printed them automatically. You just need to get familiar with cat() and use results = "asis" in your R chunk.
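The three-part split could look roughly like this. It is a sketch, not glossr's code: the function names (gloss_latex(), gloss_html(), render_gloss()) and their arguments are hypothetical, the LaTeX side assumes gb4e's \gll and \trans commands, and the HTML side is only a placeholder that shows the glosses in the browser's native title tooltip. The format argument exists so the dispatcher can be tried outside of knitting; inside a document it falls back to knitr::is_latex_output().

```r
# LaTeX version: drop the pieces into a fixed gb4e template with sprintf().
# "\\\\" in the R string becomes "\\" (a LaTeX line break) in the output.
gloss_latex <- function(source, glosses, translation, label) {
  sprintf(
    "\\begin{exe}\n\\ex\\label{%s}\n\\gll %s\\\\\n%s\\\\\n\\trans %s\n\\end{exe}\n",
    label, source, glosses, translation
  )
}

# HTML version: a placeholder that shows the glosses as a hover tooltip
# (no HTML escaping, for brevity).
gloss_html <- function(source, glosses, translation, label) {
  sprintf(
    '<div class="gloss" id="%s"><p title="%s">%s</p><p>%s</p></div>\n',
    label, glosses, source, translation
  )
}

# Dispatcher: ask knitr which format is being rendered, then delegate.
render_gloss <- function(source, glosses, translation, label, format = NULL) {
  if (is.null(format)) {
    format <- if (knitr::is_latex_output()) "latex" else "html"
  }
  switch(format,
    latex = gloss_latex(source, glosses, translation, label),
    html = gloss_html(source, glosses, translation, label),
    stop("Unsupported output format: ", format)
  )
}
```

In a chunk with results = "asis", the string would then be printed with cat(), e.g. cat(render_gloss("Ik zie je", "1SG see 2SG", "'I see you.'", "ex-zien")), which is the same cat() plus results = "asis" trick as printing plots in a loop.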

You see the pattern, right? Each of the small pieces of knowledge that I used to quickly solve the LaTeX problem took me a lot of time and effort in a previous project, in which I didn’t know if they would be useful in the future. The link is not even that obvious: from figuring out how to print plots in a loop to rendering glosses in a specific format! But if I hadn’t explored those skills back then, creating glossr would have been an even greater challenge, or, more likely, I would never have thought it within my reach at all.

So, I tried out my pet function for LaTeX output in a simple R Markdown file and was very, very happy when it worked. I also checked what happened if I applied it to several rows of a table (using purrr::pmap(), which I had learned during yet another old exploration), and the glosses were printed very neatly one after the other. That way, users could keep a central table with their examples, maybe in a file that can be reused across projects, and very easily select the examples they want to print. Brilliant!
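Applying a gloss function row by row could look like this with purrr::pmap_chr(), the variant of purrr::pmap() that returns a character vector. The column names have to match the function's argument names; the table and the one-line gloss_latex() helper are made up for illustration.

```r
library(purrr)

# Hypothetical gloss function; the column names below match its arguments
gloss_latex <- function(source, glosses, translation, label) {
  sprintf("\\begin{exe}\n\\ex\\label{%s}\n\\gll %s\\\\\n%s\\\\\n\\trans %s\n\\end{exe}\n",
          label, source, glosses, translation)
}

# A central table of examples, e.g. read from a CSV shared across projects
examples <- data.frame(
  source = c("Ik zie je", "Zij slaapt"),
  glosses = c("1SG see 2SG", "3SG.F sleep.3SG"),
  translation = c("'I see you.'", "'She sleeps.'"),
  label = c("ex-zien", "ex-slapen"),
  stringsAsFactors = FALSE
)

# pmap_chr() calls gloss_latex() once per row, matching columns to arguments by name
glosses_tex <- pmap_chr(examples, gloss_latex)

# In a chunk with results = "asis", this prints the glosses one after the other
cat(glosses_tex, sep = "\n")
```

Selecting examples is then ordinary data-frame filtering before the call, e.g. pmap_chr(examples[examples$label == "ex-slapen", ], gloss_latex).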

But. HTML. I didn’t even know how to create glosses in raw HTML, let alone generate them from R. I looked it up online, but the forum discussions were pits of despair, and whatever I did find was either unclear or did not solve my issues. Should you use tables? Should you use lists? How much work is it, and is it readable by screen readers?² At this stage I found neither lingglosses (which uses tables) nor leipzig.js (which uses nested lists and is the current default implementation in glossr). I think I spent a whole day just trying to crack this puzzle. I felt quite confident with HTML and I knew some JavaScript, although not really in conjunction with R. After turning the problem over in my head for hours, I thought of a lateral solution, which can be implemented with glossr::use_glosses("tooltip"). I had to rephrase my problem: I didn’t necessarily want to print interlinear glosses in HTML, just to have the examples render in some readable way; after all, you wouldn’t publish a paper in HTML, right? And maybe it wasn’t necessary to have everything visible at once; maybe the morphological annotation could be coupled to the original text in a different way, taking advantage of HTML-specific features that were inapplicable in other formats. So, tooltips. For a whole day, at least, I was convinced that it was a good solution, and I just had to figure out how to implement it. I found the tippy R package, which implements tippy.js, and I thought that it would be awesome to use a singleton tippy with transition.

It took me hours of tinkering with the code until I gave up. I could render tooltips with tippy, but not the singleton with transition, and the example always showed up under its number instead of next to it, which drove me crazy³. In the end I looked up how to create simple tooltips and implemented them in a few minutes. The alignment code that hadn’t worked with tippy seemed to work well enough with these, which were so much easier to implement. You would think at this point that I wasted hours trying to figure out tippy and that it was all discarded when I found a simpler way, but that would be a short-sighted judgement. In fact, while digging into the guts of tippy I learned about cool htmltools functions that I had taken for granted in my experience with shiny or had never even heard of, such as htmlDependency() and tagList(), and they were crucial for the next steps.
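A simple tooltip version can be put together with htmltools::tags and tagList(), here using the browser's native tooltip (the title attribute) on each word. This is a hypothetical sketch: gloss_tooltip() is made up, it assumes words and glosses are separated by single spaces, and glossr's own tooltips may be built differently.

```r
library(htmltools)

# Hypothetical: one <span> per word, with its gloss shown on hover
gloss_tooltip <- function(source, glosses, translation, label) {
  words <- strsplit(source, " ", fixed = TRUE)[[1]]
  word_glosses <- strsplit(glosses, " ", fixed = TRUE)[[1]]
  stopifnot(length(words) == length(word_glosses))
  # unname() so htmltools treats the list purely as children
  spans <- unname(Map(
    function(word, gloss) tags$span(class = "gloss-word", title = gloss, word),
    words, word_glosses
  ))
  # tagList() bundles the example and its free translation without an extra wrapper
  tagList(
    tags$p(id = label, class = "gloss-source", spans),
    tags$p(class = "gloss-translation", translation)
  )
}

gloss_tooltip("Ik zie je", "1SG see 2SG", "'I see you.'", "ex-zien")
```

Because htmltools objects print as HTML in knitr, such a function can be called directly in a chunk without cat() or results = "asis".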

Speaking of next steps, I seem to have a tendency to move on from one challenge to the next without taking a break to celebrate my achievements. At every success, like when I had the tooltips running, I immediately found something else to focus on, like unifying the cross-reference call for LaTeX and HTML examples. Instead of, I don’t know, patting myself on the back? Standing up and jumping for joy? Looking back and appreciating how I had finally solved a problem with knowledge I did not have two hours earlier?

At this point in the story I have a function that takes pieces of glosses and, depending on whether your R Markdown file will knit to HTML or PDF, renders tooltips or gb4e glosses. It requires you to load gb4e and to add a little bit of JavaScript at the beginning of your report, and it renders the HTML with tooltips instead of classic glosses, but it works. I send the script to Giulia.

Yes, the script. It wasn’t a package yet.

Into the oven and beyond

Only after Giulia shared her joy and enthusiasm about my script (even though the HTML output was not ideal) did I start fleshing out the package itself. I checked whether the name I wanted was already taken, by googling and with available::available(), read the style guide to start with good practices from the beginning (e.g. I had to change all my camelCase functions to snake_case), and set up the R project for the package. This meant (1) organizing my functions into coherent files, (2) naming them in a consistent and clear way, (3) adding clear documentation and (4) writing useful tests. Unlike in my previous incursions into R package development, I was designing it for others. I had to make sure that other people, even people who don’t know me and won’t just send me an email when something breaks, could use it. I had to make it portable, user-friendly, sustainable. The tests should help me keep the core functionality working even when I make major changes to the code and, after adding the Word output, I must say they have paid off.
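Tests of that kind might look roughly as follows with testthat (an assumption: which testing framework glossr uses isn't stated here). The gloss_latex() function is a hypothetical stand-in defined inline so the snippet runs on its own; in a package it would live in R/ and the tests in tests/testthat/.

```r
library(testthat)

# Hypothetical stand-in for a package function
gloss_latex <- function(source, glosses, translation) {
  if (length(strsplit(source, " ")[[1]]) != length(strsplit(glosses, " ")[[1]])) {
    stop("`source` and `glosses` must have the same number of words")
  }
  sprintf("\\gll %s\\\\\n%s\\\\\n\\trans %s", source, glosses, translation)
}

test_that("LaTeX glosses use the gb4e template", {
  out <- gloss_latex("Ik zie je", "1SG see 2SG", "'I see you.'")
  expect_match(out, "\\gll Ik zie je\\\\", fixed = TRUE)
  expect_match(out, "\\trans 'I see you.'", fixed = TRUE)
})

test_that("mismatched word counts are caught early", {
  expect_error(gloss_latex("Ik zie je", "1SG see", "'I see you.'"), "same number of words")
})
```

Tests that pin down the output format are what make later refactoring (like adding a new output format) less scary: if the LaTeX template changes by accident, a test fails.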

I found leipzig.js while I was turning the first script into a package. I loved the output, the user-friendliness of the input, the flexibility. The HTML itself was easy to write; what remained was including the JavaScript in the R Markdown file. That was not so hard in itself… the real challenge, which I imposed on myself when thinking of potential users, was to include the JavaScript from the package, rather than asking users to type <script src="..."></script> at the beginning of each file in which they might use glosses. The same went for PDF: I wanted to load gb4e automatically, not depend on the user remembering to call the package. More importantly, I knew that this must be possible, simply because packages like kableExtra exist. R Markdown itself and most packages that build on it call JavaScript scripts, CSS files, LaTeX packages, etc., without requiring you to type anything extra. That said, inspired by xaringanExtra’s instructions, I still had users start with a single call to glossr::use_glossr().
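Roughly, this is how a package can ship leipzig.js itself: an htmltools::htmlDependency() describes the script and stylesheet, and attaching it to the gloss markup lets R Markdown add the corresponding tags to the page (dependencies with the same name are deduplicated), without the user typing them. Everything package-specific here is an assumption: the package name "mypkg", the "leipzig" folder under inst/, the file names and the version string are placeholders, and the data-gloss markup (three <p> lines inside an element with a data-gloss attribute) follows leipzig.js's README as far as I know it.

```r
library(htmltools)

# Placeholder dependency: files would live in inst/leipzig/ of a hypothetical package
leipzig_dependency <- function() {
  htmlDependency(
    name = "leipzig",
    version = "0.0.1",        # placeholder, not the real leipzig.js version
    src = "leipzig",           # folder inside the installed package
    package = "mypkg",         # hypothetical package name
    script = "leipzig.js",
    stylesheet = "leipzig.css"
  )
}

# One gloss in the markup leipzig.js looks for, with the dependency attached
gloss_leipzig <- function(source, glosses, translation, label) {
  gloss <- tags$div(
    id = label,
    `data-gloss` = NA,         # NA renders a bare (boolean) attribute
    tags$p(source),
    tags$p(glosses),
    tags$p(translation)
  )
  attachDependencies(gloss, leipzig_dependency())
}

g <- gloss_leipzig("Ik zie je", "1SG see 2SG", "'I see you.'", "ex-zien")
```

The design choice is that each gloss carries its own dependency, so the user never has to remember a <script> tag; the rendering pipeline resolves duplicates when building the page.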

The tools I needed to include this extra code were not in my toolkit before I started writing the package. Between googling my problems and exploring the GitHub repositories of packages that seemed to have solved similar issues, I found what I wanted. “What does xaringanExtra::use_xaringan_extra() actually do?”, I asked myself, and went to check the code. It turned out that it relied on the same mysterious htmltools::htmlDependency() I had seen pop up in tippy. I also found, in an issue in the bookdown repository, someone reporting that rmarkdown::latex_dependency() didn’t work properly, which allowed me to learn (1) that the function existed, (2) what it did and (3) how to use it correctly. It also showed me the magical knitr::asis_output() function, which meant that the package itself could produce the output you would otherwise get from a chunk with results = "asis", so that the user wouldn’t have to! BRILLIANT!
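Put together, a use_glossr()-style setup function can return empty "asis" output whose metadata carries the dependencies, so that rmarkdown adds the LaTeX package to the preamble (or the scripts to the HTML page) with no further action from the user. A sketch under assumptions: the function name is hypothetical, it loads gb4e as in the early version described here, and the HTML dependency's name, version, folder and package are placeholders.

```r
# Hypothetical setup function in the spirit of use_glossr()
use_glosses_setup <- function(format = NULL) {
  if (is.null(format)) {
    format <- if (knitr::is_latex_output()) "latex" else "html"
  }
  deps <- switch(format,
    # rmarkdown turns this into \usepackage{gb4e} in the preamble
    latex = list(rmarkdown::latex_dependency("gb4e")),
    # placeholder dependency; name, version and paths are made up
    html = list(htmltools::htmlDependency(
      name = "leipzig", version = "0.0.1",
      src = "leipzig", package = "mypkg", script = "leipzig.js"
    )),
    stop("Unsupported output format: ", format)
  )
  # Empty text, as if printed from a results = "asis" chunk, plus metadata
  knitr::asis_output("", meta = deps)
}
```

A user would call it once in a chunk near the top of the document; the chunk itself prints nothing visible.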

Looks like everything was going smoothly, right? It was not. The LaTeX version worked, but when I was testing out the HTML version in the vignette, RStudio crashed. It was so bad that I had to use the Task Manager to kill it. I couldn’t debug it in any meaningful way. I just looked back and forth between my code and the uses of htmltools::htmlDependency() online to find what I was doing wrong, and I couldn’t. If there was a typo, it was hiding very well (to be fair, I ran into many bugs because I kept typing leizpig instead of leipzig). It was late at night (midnight is late at night for me), I had been at it for hours, and at some point I had to admit that my brain was not going to figure that one out before going to bed. It was a good call. The next morning I tried the code in a new, smaller R Markdown file with only one gloss and it worked. Therefore, the issue was not in my use of htmltools::htmlDependency() but in the combination of multiple glosses! Somewhere else entirely! Luckily, I knew enough JavaScript to make a reasonable hypothesis about the problem. It turns out that leipzig.js needs both a separate script and a small JavaScript call in your HTML file, and the call has to show up after the script. My R code was inserting both the script and the extra call each time it rendered a gloss, and that was messing up the order. I tested the hypothesis by identifying the first gloss, calling both things there, and then removing either the script, the extra call or both from all the other glosses. Once I found the combination that worked, I applied it, and problem solved!
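The idea behind the fix can be sketched as a small piece of state that remembers whether the first gloss has already been rendered, so that the initialization call only goes out once. This illustrates the idea rather than glossr's code: the environment and function names are hypothetical, and the Leipzig().gloss() call inside a DOMContentLoaded listener follows the leipzig.js README as far as I know it, so it is worth double-checking against the version in use.

```r
# Package-level state: has the leipzig.js initialization been emitted yet?
.gloss_state <- new.env(parent = emptyenv())
.gloss_state$initialized <- FALSE

# Hypothetical: return the init <script> for the first gloss only, NULL afterwards
leipzig_init_once <- function() {
  if (.gloss_state$initialized) {
    return(NULL)
  }
  .gloss_state$initialized <- TRUE
  htmltools::tags$script(htmltools::HTML(
    "document.addEventListener('DOMContentLoaded', function () { Leipzig().gloss(); });"
  ))
}

first <- leipzig_init_once()   # a <script> tag
second <- leipzig_init_once()  # NULL, so later glosses add nothing
```

One caveat with this design: the flag lives as long as the R session, so a real version would need to reset it whenever a new document starts rendering.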

Debugging is not an obvious task. You learn how to do it by trying and failing, by thinking of messes you’ve encountered before, by breaking the code into small pieces and exploring each of them, reconstructing it until it breaks again. And, as with science in general, you make a hypothesis about where the problem lies and how to solve it… and if it doesn’t work, you still have to figure out whether the failure was in the proposed solution or in your idea of where the problem was in the first place.

With this, I had the first version of glossr. I tried it in another project of mine, ran into the gb4e issue that requires \noautomath and learned how to add it programmatically via the already implemented rmarkdown::latex_dependency() call (yeey!). It’s great to know that something like this will probably come in handy in some foggy future I cannot yet conceive of, because now⁴ that glossr uses expex instead, \noautomath is not necessary anymore.
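With rmarkdown::latex_dependency(), adding \noautomath right after loading gb4e comes down to the extra_lines argument. A minimal sketch; the wrapper name is hypothetical:

```r
# Hypothetical wrapper: load gb4e and switch off its "automath" behaviour
# (by default gb4e makes _ and ^ active outside math mode)
gb4e_dependency <- function() {
  rmarkdown::latex_dependency("gb4e", extra_lines = "\\noautomath")
}

dep <- gb4e_dependency()
# rmarkdown should write this to the preamble as \usepackage{gb4e} followed by \noautomath
```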

So, glossr 0.1.0. Uploaded to GitHub, followed by thinking up fun ideas for logos, doodling a bit, trying out my idea in Krita and then combining it with hexSticker, and setting up the pkgdown site with some vignettes, links and thoughts. But this is just the start. I look forward to people using the package and maybe helping me make it better. As a package user (more than a package developer), I find it strange to think that my input matters, that I have a voice that can help steer a tool I use in a certain direction. But as I start creating things that others can use, I really want to hear what they think, because maybe there is a little something I can still do that could mean a lot to them. I like that.

¹ The Word functionality is in development, but it will be published soon!

² I actually don’t know how friendly the current output is for screen readers; I would love to talk to someone who uses them and learn.

³ It’s amazing that I spent hours trying to fix that, but once I found leipzig.js and the number was next to the example instead of on top of it, I was happy enough.

⁴ Coming soon.